Can outdated WebView2 versions and unstable drivers cause Microsoft Teams video issues for a user? A story of how we helped a client figure out the cause of a stubborn problem!

A Stubborn Problem – Where to start

We often get questions about frustrating issues that users experience during Teams calls and that admins cannot figure out. Even with admin tooling and Microsoft Teams administrator insights, such issues can be hard to diagnose, as they can be caused by any of the many moving parts that make up the user experience of a Teams call. The following case is an example of an interesting and hard-to-diagnose Teams call issue. It involves several variables and highlights how complex it is to troubleshoot multiple factors that can affect Teams call quality.

Unmasking the Culprit

The Issue:

A customer approached us asking for help investigating a very challenging issue that had been troubling them. A growing number of their users were regularly facing issues with incoming video or screen sharing not working during calls. Instead of seeing the other party or the shared screen, all they got was a dark screen. It would often resolve itself after several minutes but was annoying and disruptive nonetheless. As many factors can cause this behavior, it was proving to be quite a challenge to analyze. With more and more users reporting the issue, it was also quickly becoming a major headache.

The client therefore asked whether we, with the help of TrueDEM, could help them identify the cause and find a solution.

Let’s start: Finding the similarities

The reported issue affected not just a single user but several, which can be a clue. What do these users have in common that could cause it? This was easily answered, as the client mentioned that they were rolling out new hardware (laptops) and most of the affected users were using the new device type. That made it a logical first step to look into what was happening during problematic calls on such a device.

The problem occurred solely on the incoming visual streams (video and screen sharing), so GPU and rendering capabilities would be important. Rendering is handled by the graphics card and involves several WebView2 processes, such as the Renderer and GPU processes.

We selected a random call from one of the affected users, and the following immediately caught our attention:

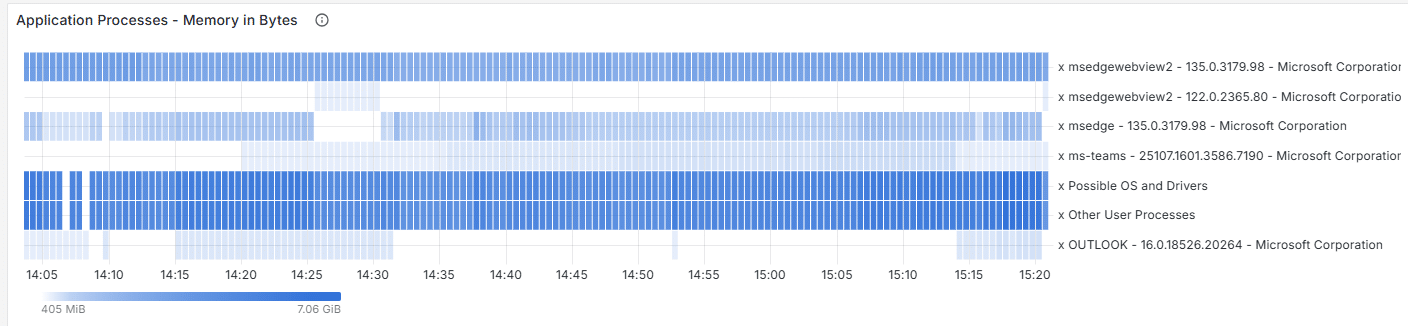

First clue – Running processes

During the call, not one but two WebView2 processes were running, with two completely different versions. Version 135.0.3179 was the evergreen version at that time and was the one the Teams client was using, which you can see because it is the WebView2 process used continuously throughout the call. But apparently another application on the user’s device was using an older version, namely 122.0.2365. Seeing an outdated version signals that the invoking app was not built to use the most recent WebView2. Instead, it used a static (fixed) version of WebView2 bundled directly into the app.
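The check described above can be sketched in a few lines of Python. This is a minimal illustration, not how TrueDEM does it: the process records, the application names, and the `find_non_evergreen` helper are hypothetical, and only the two version numbers come from the call we looked at.

```python
from collections import defaultdict

# Hypothetical process snapshot for one call; only the version numbers
# are taken from the case described in this article.
processes = [
    {"app": "Teams", "name": "msedgewebview2.exe", "version": "135.0.3179"},
    {"app": "Teams", "name": "msedgewebview2.exe", "version": "135.0.3179"},
    {"app": "OtherApp", "name": "msedgewebview2.exe", "version": "122.0.2365"},
]

def parse_version(version):
    """Turn '135.0.3179' into (135, 0, 3179) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))

def find_non_evergreen(processes, evergreen_version):
    """Return {app: set of versions} for WebView2 processes older than the evergreen version."""
    evergreen = parse_version(evergreen_version)
    outdated = defaultdict(set)
    for proc in processes:
        if proc["name"].lower() != "msedgewebview2.exe":
            continue  # not a WebView2 process
        if parse_version(proc["version"]) < evergreen:
            outdated[proc["app"]].add(proc["version"])
    return dict(outdated)

print(find_non_evergreen(processes, "135.0.3179"))
```

Comparing parsed tuples rather than raw strings matters here, because a plain string comparison would order version numbers character by character instead of numerically.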

As shown in the following picture, for a period of 7 minutes (2:25pm – 2:32pm) this other application’s WebView2 process ranked among the top memory consumers.

Could this be the issue?

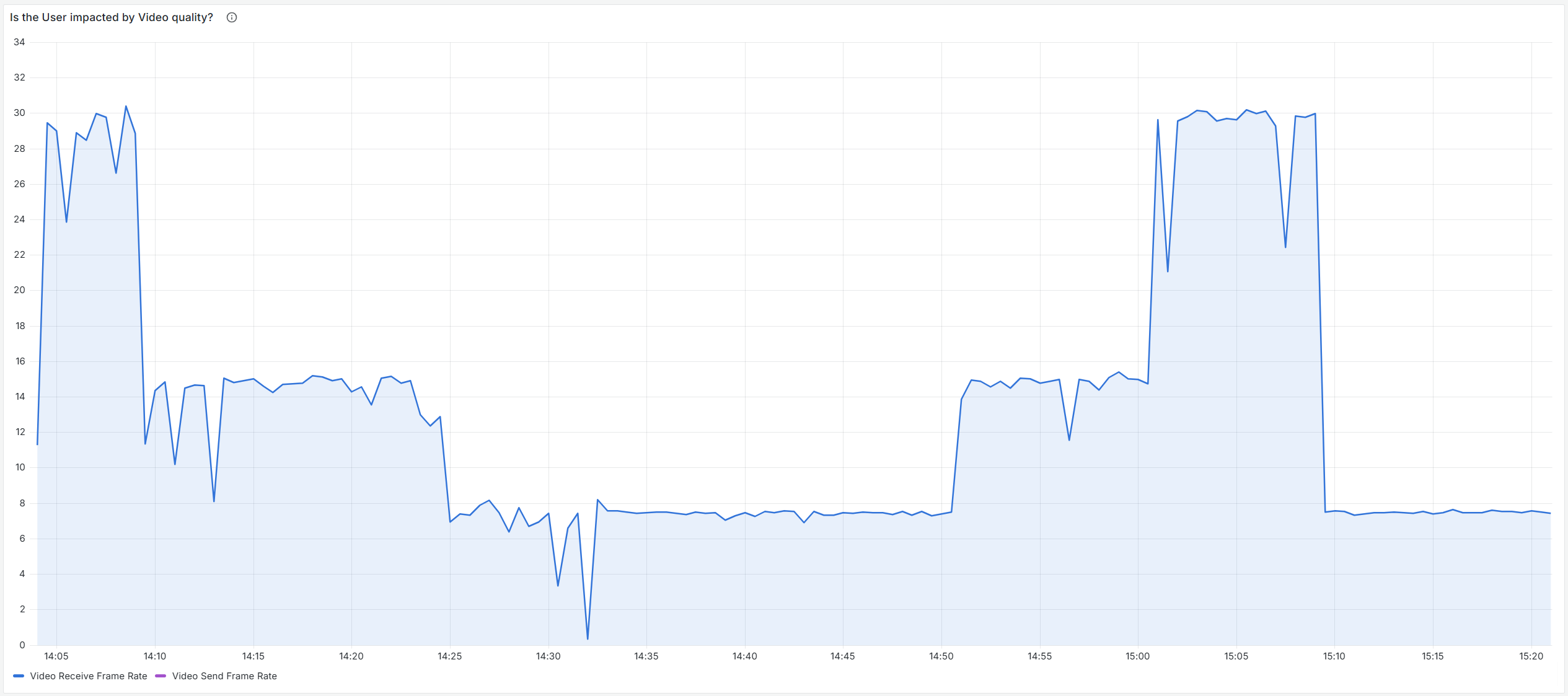

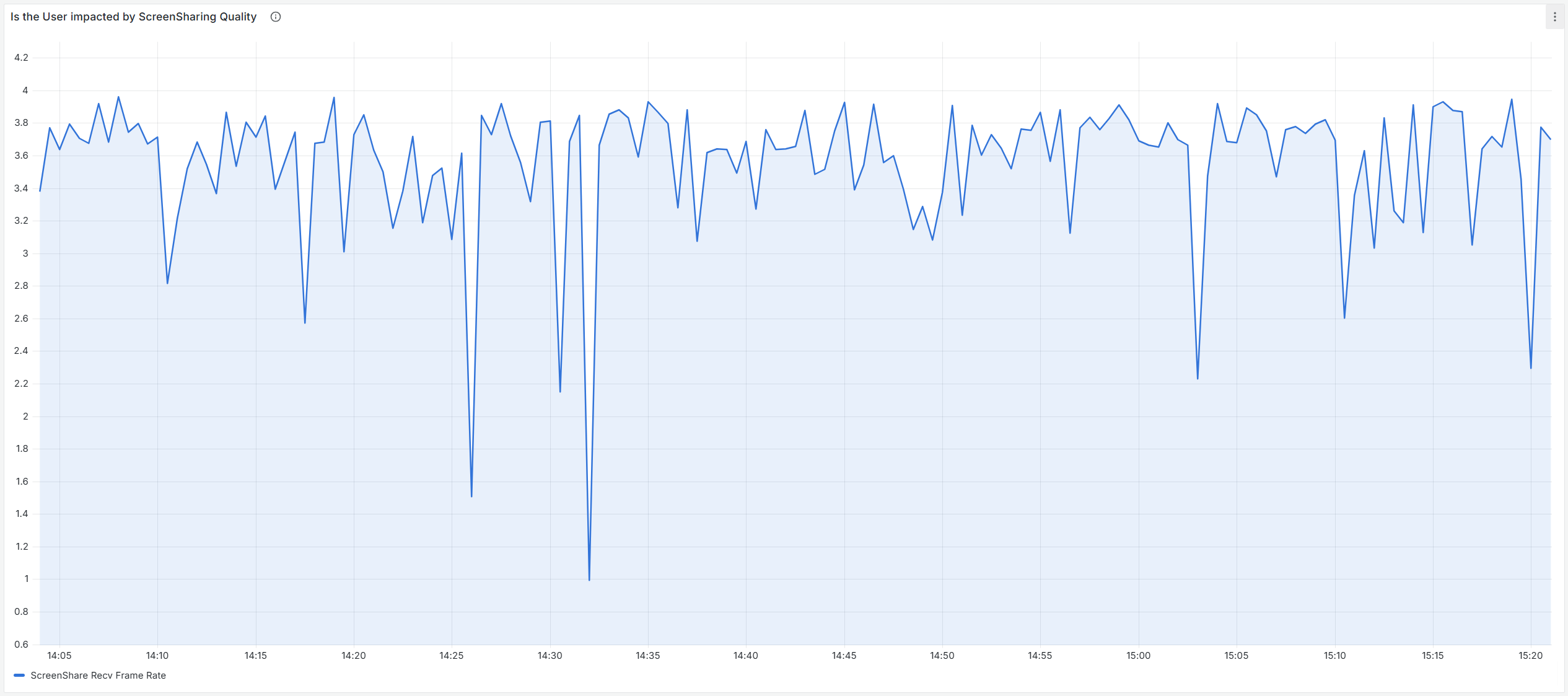

We did not know the exact moment the issue had occurred, as the user had not reported it. So we checked whether the outdated WebView2 process coincided with anything in the call that could indicate the issue. We didn’t have to look far. While this process was running, the ‘Inbound Video Frames’ metric started showing significant drops, at one point falling almost to zero. This happened both for video and for video-based screen sharing (shown in two pictures below). A very good indicator of a serious issue!
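To make this kind of comparison concrete, here is a small Python sketch that compares the average inbound frame rate inside and outside the window in which the other process was active. The sample values and the `compare_window` helper are invented for illustration; only the 2:25pm to 2:32pm window comes from the call above.

```python
from datetime import datetime
from statistics import mean

# Hypothetical per-minute samples of inbound video frames per second.
samples = [
    ("14:22", 30), ("14:23", 29), ("14:24", 30),
    ("14:25", 12), ("14:26", 6), ("14:27", 1), ("14:28", 4),
    ("14:29", 8), ("14:30", 10), ("14:31", 9), ("14:32", 14),
    ("14:33", 29), ("14:34", 30),
]

def to_time(text):
    """Parse an 'HH:MM' string into a time object."""
    return datetime.strptime(text, "%H:%M").time()

def compare_window(samples, start, end):
    """Return the average frame rate inside and outside the [start, end] window."""
    start_t, end_t = to_time(start), to_time(end)
    inside = [fps for t, fps in samples if start_t <= to_time(t) <= end_t]
    outside = [fps for t, fps in samples if not (start_t <= to_time(t) <= end_t)]
    return mean(inside), mean(outside)

inside_avg, outside_avg = compare_window(samples, "14:25", "14:32")
print(f"inside window: {inside_avg:.1f} fps, outside: {outside_avg:.1f} fps")
```

A large gap between the two averages only shows that the two events overlap in time. It does not prove that one caused the other.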

Getting into it, what’s going on with the user’s rendering?

But does this explain our issue? Not necessarily… When multiple applications run, each runs its own isolated WebView2 process group, and these groups can use different versions. So theoretically, this alone should not cause the blackouts in the Teams call. We had to dig deeper.

The next step was to look at the graphics processing unit (GPU). Each app uses its own WebView2 processes, but they all share the same GPU and GPU driver whenever they perform rendering tasks. When multiple WebView2 apps compete for GPU and driver resources, this can cause an overload known as GPU contention. With a heavy WebView2 app like Teams running alongside another heavy WebView2 process, GPU contention could certainly be a factor. If contention reaches a level where the driver becomes unresponsive, the operating system can force a restart of the GPU driver, making it temporarily unavailable. That would explain a blackout of the Teams video stream.

So, the next logical step was to check if the GPU was really used in the Teams call during the issues and if so, what driver version was installed.

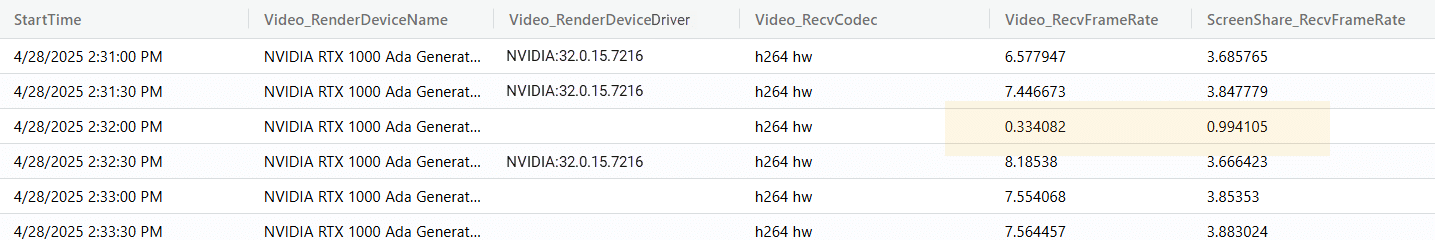

The user’s device was using an NVIDIA RTX 1000 Ada Generation Laptop GPU with driver v32.0.15.7216, and all the calls where the blackout occurred indicated that H.264 hardware decoding was being used. This confirmed that the physical GPU was used during the call and that it was worth looking further into the GPU.
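As an aside, the Windows-style driver version shown here can be translated into the release number NVIDIA uses on its download pages, which makes it easier to compare against the latest available driver. The sketch below assumes the commonly used convention that the release number is formed from the last five digits of the Windows version; the `nvidia_release` function name is ours.

```python
def nvidia_release(windows_version):
    """Convert a Windows driver version such as '32.0.15.7216' to NVIDIA's 'NNN.NN' form.

    Assumption: the release number is the last five digits of the final two
    version components, with a dot before the last two digits.
    """
    parts = windows_version.split(".")
    if len(parts) != 4 or not all(part.isdigit() for part in parts):
        raise ValueError(f"unexpected driver version: {windows_version!r}")
    digits = (parts[2] + parts[3].zfill(4))[-5:]
    return f"{digits[:3]}.{digits[3:]}"

print(nvidia_release("32.0.15.7216"))
```

Under that assumption, the driver in this case corresponds to release 572.16.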

The real-time insights also showed that the GPU was using the H.264 hardware codec for decoding instead of the far superior AV1 codec, which this brand-new device with its NVIDIA card should be able to support.

Getting closer… The final clue

The fact that the GPU was not using the AV1 codec was a good indicator that the NVIDIA driver might be outdated. Checking the other calls where users had reported Teams video issues, we saw the same findings, which created a clear pattern.

We now had all the relevant information together. Let’s look at what to do with it.

Connecting the dots – outcomes and recommendations

Based on the findings, we knew the following: during the Teams calls in which users reported incoming video and screen sharing suddenly going black, another app was running an older WebView2 version around the time the issues occurred. We also saw the GPU using the less optimal H.264 hardware codec instead of the supported, and preferred, AV1 codec.

Investigations on public forums showed that other people had reported similar cases, especially with combinations of WebView2 versions below 130.x and outdated NVIDIA drivers.

Next steps:

Based on our findings, there were now two clear suspects for the problem and two potential solutions for the client to explore:

- Potential outdated driver issue: First check whether the NVIDIA driver is up to date and, if not, update it.

- Potential GPU contention issue: Identify the other app that is using the static WebView2 version and research whether it can be upgraded to use a more recent WebView2 version.
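For a rollout with many devices, these two checks could be run against a device inventory. The Python sketch below is a rough illustration under stated assumptions: the inventory rows, field names, and `suspects` helper are hypothetical, `MIN_DRIVER` is a placeholder rather than a vendor recommendation, and the WebView2 threshold of 130 is taken from the forum reports mentioned earlier.

```python
# Placeholder minimum driver version; replace with the version you have validated.
MIN_DRIVER = (32, 0, 15, 7300)
# WebView2 major version below which the forum reports describe problems.
MIN_WEBVIEW2_MAJOR = 130

# Hypothetical inventory rows.
devices = [
    {"name": "laptop-01", "gpu_driver": "32.0.15.7216",
     "webview2_versions": ["135.0.3179", "122.0.2365"]},
    {"name": "laptop-02", "gpu_driver": "32.0.15.7300",
     "webview2_versions": ["135.0.3179"]},
]

def parse_version(text):
    """Turn a dotted version string into a tuple of ints for numeric comparison."""
    return tuple(int(part) for part in text.split("."))

def suspects(device):
    """Return the list of suspects that apply to one device."""
    found = []
    if parse_version(device["gpu_driver"]) < MIN_DRIVER:
        found.append("outdated GPU driver")
    old = [v for v in device["webview2_versions"]
           if parse_version(v)[0] < MIN_WEBVIEW2_MAJOR]
    if old:
        found.append("app with old fixed WebView2: " + ", ".join(sorted(old)))
    return found

for device in devices:
    print(device["name"], suspects(device) or "no suspects")
```

Keeping the two checks separate mirrors the two suspects: a device can be flagged for either one, for both, or for neither.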

The first item was straightforward and was tackled by the client team almost immediately. Indeed, the NVIDIA drivers on the devices were outdated and were subsequently brought up to date. Identifying the other app was also no problem, but as with any software in an enterprise environment, updating it would be a more complex action, as it requires proper planning and deployment.

Luckily, the NVIDIA driver update alone led to an immediate reduction in reported Teams video issues, confirming that the outdated driver was indeed a cause.

How TrueDEM Helped Unlock the Solution

By leveraging both retrospective insights and real-time data, TrueDEM gave us a minute-by-minute look into the affected calls. This allowed us to pinpoint crucial factors that were previously hidden, such as the use of an outdated WebView2 process in another application and the specific codecs used for encoding and decoding throughout the calls. These critical clues ultimately led to the root causes: an outdated driver and/or GPU contention.

With this newfound clarity, TrueDEM was able to provide the client with clear insights and a direct path to resolution, one that allowed them to proactively fix the problem for every user who had, or was going to get, the new device and to reduce future incidents.